Bookshop Example Application

Bookshop is a virtual online bookstore application through which you can buy books of various categories and rate the books you have read.

To make the application developer guide easier to follow, its example SQL statements are based on the table structures and data of the Bookshop application. This document describes how to import the table structures and data, and defines each table.

Import table structures and data

You can import Bookshop table structures and data either via TiUP or via the import feature of TiDB Cloud.

If you use TiDB Cloud, you can skip Method 1: Via tiup demo and import the Bookshop table structures and data via the import feature of TiDB Cloud.

Method 1: Via tiup demo

If your TiDB cluster is deployed using TiUP or you can connect to your TiDB server, you can quickly generate and import sample data for the Bookshop application by running the following command:

tiup demo bookshop prepare

By default, this command connects to port 4000 on address 127.0.0.1, logs in as the root user without a password, and creates the table structures in a database named bookshop.

Configure connection information

The following table lists the connection parameters. You can change their default settings to match your environment.

| Parameter | Abbreviation | Default value | Description |
|---|---|---|---|
| --password | -p | None | Database user password |
| --host | -H | 127.0.0.1 | Database address |
| --port | -P | 4000 | Database port |
| --db | -D | bookshop | Database name |
| --user | -U | root | Database user |

For example, if you want to connect to a database on TiDB Cloud, you can specify the connection information as follows:

tiup demo bookshop prepare -U root -H tidb.xxx.yyy.ap-northeast-1.prod.aws.tidbcloud.com -P 4000 -p

Set the data volume

You can specify the volume of data to be generated in each database table by configuring the following parameters:

| Parameter | Default value | Description |
|---|---|---|
| --users | 10000 | The number of rows of data to be generated in the users table |
| --authors | 20000 | The number of rows to be generated in the authors table |
| --books | 20000 | The number of rows of data to be generated in the books table |
| --orders | 300000 | The number of rows of data to be generated in the orders table |
| --ratings | 300000 | The number of rows of data to be generated in the ratings table |

For example, the following command is executed to generate:

- 200,000 rows of user information via the --users parameter

- 500,000 rows of book information via the --books parameter

- 100,000 rows of author information via the --authors parameter

- 1,000,000 rows of rating records via the --ratings parameter

- 1,000,000 rows of order records via the --orders parameter

tiup demo bookshop prepare --users=200000 --books=500000 --authors=100000 --ratings=1000000 --orders=1000000 --drop-tables

To delete the existing table structures before generating new data, add the --drop-tables parameter, as in the command above. For descriptions of more parameters, run the tiup demo bookshop --help command.

Method 2: Via TiDB Cloud Import

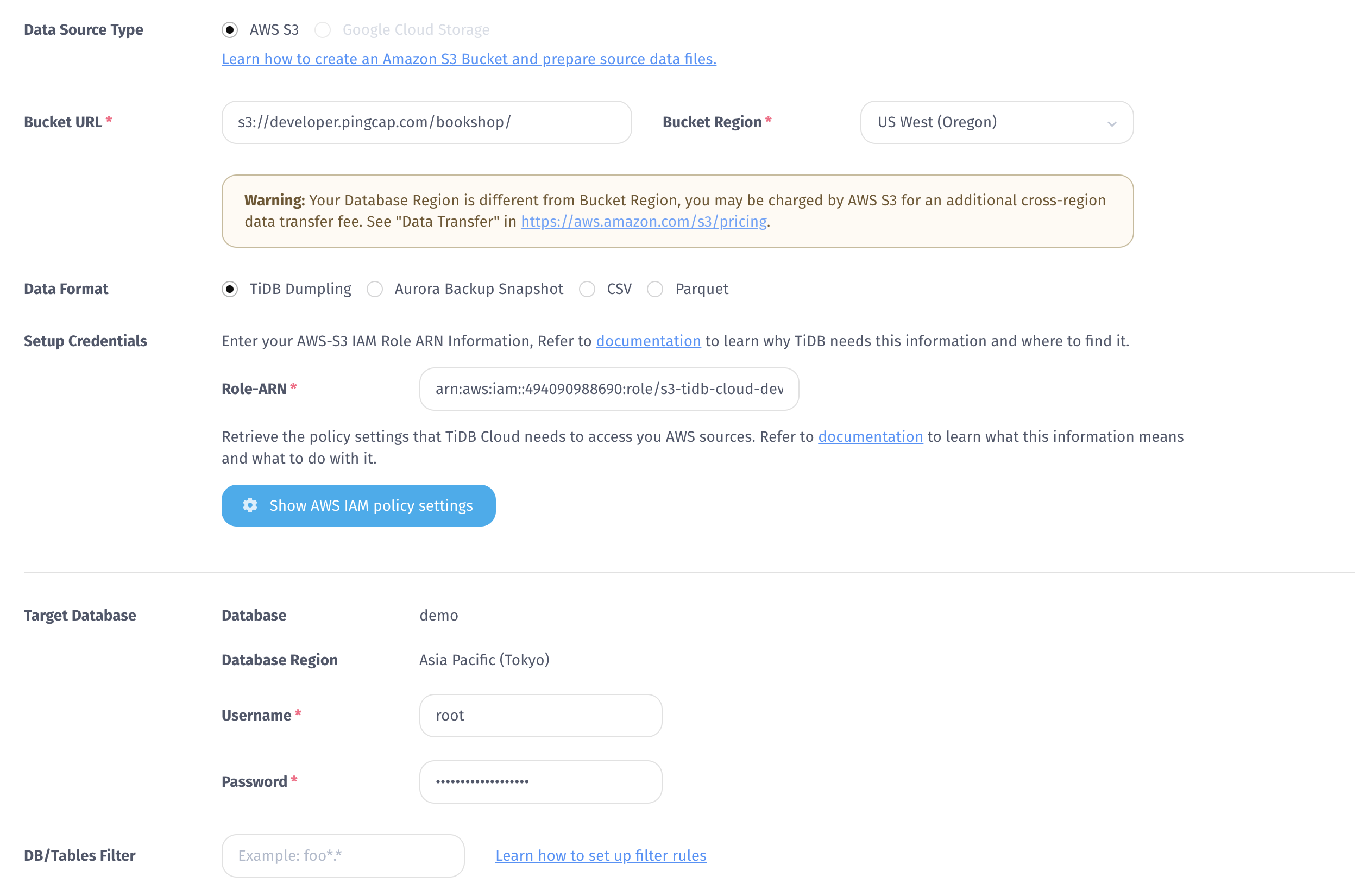

On the database details page of TiDB Cloud, click the Import button to enter the Data Import Task page. On this page, perform the following steps to import the Bookshop sample data from AWS S3 to TiDB Cloud.

Copy the following Bucket URL and Role-ARN to the corresponding input boxes:

Bucket URL:

s3://developer.pingcap.com/bookshop/

Role-ARN:

arn:aws:iam::494090988690:role/s3-tidb-cloud-developer-access

In this example, the following data is generated in advance:

- 200,000 rows of user information

- 500,000 rows of book information

- 100,000 rows of author information

- 1,000,000 rows of rating records

- 1,000,000 rows of order records

Select US West (Oregon) for Bucket Region.

Select TiDB Dumpling for Data Format.

Enter database login information.

Click the Import button to confirm the import.

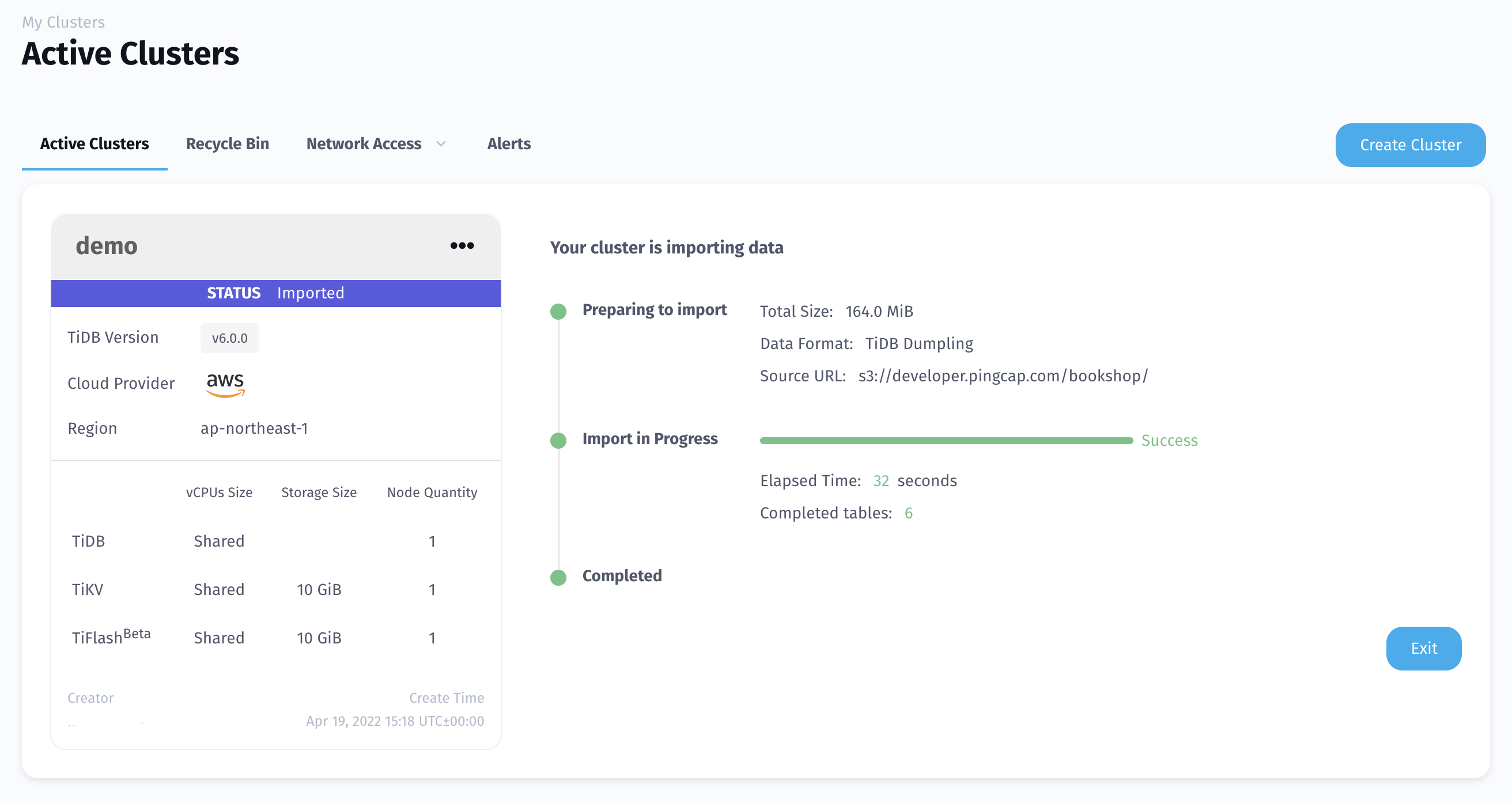

Wait for TiDB Cloud to complete the import.

If the following error message appears during the import process, run the DROP DATABASE bookshop; command to clear the previously created sample database and then import data again.

table(s) [bookshop.authors, bookshop.book_authors, bookshop.books, bookshop.orders, bookshop.ratings, bookshop.users] are not empty.

For more information about TiDB Cloud, see TiDB Cloud Documentation.

View data import status

After the import is completed, you can view the data volume information of each table by executing the following SQL statement:

SELECT
  CONCAT(table_schema,'.',table_name) AS 'Table Name',
  table_rows AS 'Number of Rows',
  CONCAT(ROUND(data_length/(1024*1024*1024),4),'G') AS 'Data Size',
  CONCAT(ROUND(index_length/(1024*1024*1024),4),'G') AS 'Index Size',
  CONCAT(ROUND((data_length+index_length)/(1024*1024*1024),4),'G') AS 'Total'
FROM
  information_schema.TABLES
WHERE table_schema LIKE 'bookshop';

The result is as follows:

+-----------------------+----------------+-----------+------------+---------+
| Table Name            | Number of Rows | Data Size | Index Size | Total   |
+-----------------------+----------------+-----------+------------+---------+
| bookshop.orders       |        1000000 | 0.0373G   | 0.0075G    | 0.0447G |
| bookshop.book_authors |        1000000 | 0.0149G   | 0.0149G    | 0.0298G |
| bookshop.ratings      |        4000000 | 0.1192G   | 0.1192G    | 0.2384G |
| bookshop.authors      |         100000 | 0.0043G   | 0.0000G    | 0.0043G |
| bookshop.users        |         195348 | 0.0048G   | 0.0021G    | 0.0069G |
| bookshop.books        |        1000000 | 0.0546G   | 0.0000G    | 0.0546G |
+-----------------------+----------------+-----------+------------+---------+
6 rows in set (0.03 sec)
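The table_rows value in information_schema.TABLES is typically an estimate derived from table statistics, so it may not match the row counts you requested. As a minimal sketch, assuming the import has finished and the tables are in the bookshop database, exact counts can be checked with COUNT(*). Each COUNT(*) scans its table, so expect this to be slower on large data sets:

```sql
-- Exact row counts for every Bookshop table.
-- Slower than reading the statistics-based estimate, but not approximate.
SELECT 'users' AS table_name, COUNT(*) AS exact_rows FROM bookshop.users
UNION ALL
SELECT 'books', COUNT(*) FROM bookshop.books
UNION ALL
SELECT 'authors', COUNT(*) FROM bookshop.authors
UNION ALL
SELECT 'book_authors', COUNT(*) FROM bookshop.book_authors
UNION ALL
SELECT 'ratings', COUNT(*) FROM bookshop.ratings
UNION ALL
SELECT 'orders', COUNT(*) FROM bookshop.orders;
```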

Description of the tables

This section describes the database tables of the Bookshop application in detail.

books table

This table stores the basic information of books.

| Field name | Type | Description |
|---|---|---|
| id | bigint(20) | Unique ID of a book |
| title | varchar(100) | Title of a book |
| type | enum | Type of a book (for example, magazine, animation, or teaching aids) |
| stock | bigint(20) | Stock |
| price | decimal(15,2) | Price |
| published_at | datetime | Publication date |
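As an illustration of how these fields can be used together, the following sketch lists recently published books of one type that are still in stock. The type value 'Novel' is taken from the enum list in the dbinit.sql script at the end of this document; the LIMIT of 10 is an arbitrary choice:

```sql
-- Ten most recently published novels with stock available.
SELECT id, title, price, published_at
FROM bookshop.books
WHERE `type` = 'Novel'
  AND stock > 0
ORDER BY published_at DESC
LIMIT 10;
```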

authors table

This table stores basic information of authors.

| Field name | Type | Description |
|---|---|---|
| id | bigint(20) | Unique ID of an author |
| name | varchar(100) | Name of an author |
| gender | tinyint(1) | Biological gender (0: female, 1: male, NULL: unknown) |
| birth_year | smallint(6) | Year of birth |
| death_year | smallint(6) | Year of death |

users table

This table stores information of Bookshop users.

| Field name | Type | Description |
|---|---|---|
| id | bigint(20) | Unique ID of a user |
| balance | decimal(15,2) | Balance |
| nickname | varchar(100) | Nickname |

ratings table

This table stores records of user ratings on books.

| Field name | Type | Description |
|---|---|---|
| book_id | bigint | Unique ID of a book (linked to books) |
| user_id | bigint | Unique ID of a user (linked to users) |
| score | tinyint | User rating (1-5) |
| rated_at | datetime | Rating time |
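A typical use of this table is aggregating scores per book. The following is a minimal sketch; the threshold of 10 ratings and the LIMIT of 10 are arbitrary values chosen for illustration:

```sql
-- Average score and number of ratings per book,
-- restricted to books with at least 10 ratings.
SELECT book_id,
       COUNT(*) AS rating_count,
       ROUND(AVG(score), 2) AS avg_score
FROM bookshop.ratings
GROUP BY book_id
HAVING COUNT(*) >= 10
ORDER BY avg_score DESC, rating_count DESC
LIMIT 10;
```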

book_authors table

An author may write multiple books, and a book may involve more than one author. This table stores the correspondence between books and authors.

| Field name | Type | Description |
|---|---|---|
| book_id | bigint(20) | Unique ID of a book (linked to books) |
| author_id | bigint(20) | Unique ID of an author (linked to authors) |
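Because book_authors only holds pairs of IDs, reading a book together with its authors requires joining through it. A minimal sketch, assuming the table and column names described in this document (the LIMIT is arbitrary and the rows returned are not in any particular order):

```sql
-- Resolve the many-to-many relationship between books and authors.
-- A book with several authors appears once per author.
SELECT b.id AS book_id,
       b.title,
       a.name AS author
FROM bookshop.books AS b
JOIN bookshop.book_authors AS ba ON ba.book_id = b.id
JOIN bookshop.authors AS a ON a.id = ba.author_id
LIMIT 20;
```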

orders table

This table stores user purchase information.

| Field name | Type | Description |
|---|---|---|
| id | bigint(20) | Unique ID of an order |
| book_id | bigint(20) | Unique ID of a book (linked to books) |
| user_id | bigint(20) | Unique ID of a user (linked to users) |
| quantity | tinyint(4) | Purchase quantity |
| ordered_at | datetime | Purchase time |
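As one possible way to explore this table, the following sketch counts orders per calendar month using the ordered_at field. It uses only columns whose names are consistent throughout this document:

```sql
-- Number of orders placed in each month.
SELECT DATE_FORMAT(ordered_at, '%Y-%m') AS order_month,
       COUNT(*) AS order_count
FROM bookshop.orders
GROUP BY DATE_FORMAT(ordered_at, '%Y-%m')
ORDER BY order_month;
```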

Database initialization script dbinit.sql

If you want to manually create database table structures in the Bookshop application, run the following SQL statements:

CREATE DATABASE IF NOT EXISTS `bookshop`;

DROP TABLE IF EXISTS `bookshop`.`books`;
CREATE TABLE `bookshop`.`books` (
  `id` bigint(20) AUTO_RANDOM NOT NULL,
  `title` varchar(100) NOT NULL,
  `type` enum('Magazine', 'Novel', 'Life', 'Arts', 'Comics', 'Education & Reference', 'Humanities & Social Sciences', 'Science & Technology', 'Kids', 'Sports') NOT NULL,
  `published_at` datetime NOT NULL,
  `stock` int(11) DEFAULT '0',
  `price` decimal(15,2) DEFAULT '0.0',
  PRIMARY KEY (`id`) CLUSTERED
) DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;

DROP TABLE IF EXISTS `bookshop`.`authors`;
CREATE TABLE `bookshop`.`authors` (
  `id` bigint(20) AUTO_RANDOM NOT NULL,
  `name` varchar(100) NOT NULL,
  `gender` tinyint(1) DEFAULT NULL,
  `birth_year` smallint(6) DEFAULT NULL,
  `death_year` smallint(6) DEFAULT NULL,
  PRIMARY KEY (`id`) CLUSTERED
) DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;

DROP TABLE IF EXISTS `bookshop`.`book_authors`;
CREATE TABLE `bookshop`.`book_authors` (
  `book_id` bigint(20) NOT NULL,
  `author_id` bigint(20) NOT NULL,
  PRIMARY KEY (`book_id`,`author_id`) CLUSTERED
) DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;

DROP TABLE IF EXISTS `bookshop`.`ratings`;
CREATE TABLE `bookshop`.`ratings` (
  `book_id` bigint NOT NULL,
  `user_id` bigint NOT NULL,
  `score` tinyint NOT NULL,
  `rated_at` datetime NOT NULL DEFAULT NOW() ON UPDATE NOW(),
  PRIMARY KEY (`book_id`,`user_id`) CLUSTERED,
  UNIQUE KEY `uniq_book_user_idx` (`book_id`,`user_id`)
) DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;

ALTER TABLE `bookshop`.`ratings` SET TIFLASH REPLICA 1;

DROP TABLE IF EXISTS `bookshop`.`users`;
CREATE TABLE `bookshop`.`users` (
  `id` bigint AUTO_RANDOM NOT NULL,
  `balance` decimal(15,2) DEFAULT '0.0',
  `nickname` varchar(100) UNIQUE NOT NULL,
  PRIMARY KEY (`id`)
) DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;

DROP TABLE IF EXISTS `bookshop`.`orders`;
CREATE TABLE `bookshop`.`orders` (
  `id` bigint(20) AUTO_RANDOM NOT NULL,
  `book_id` bigint(20) NOT NULL,
  `user_id` bigint(20) NOT NULL,
  `quality` tinyint(4) NOT NULL,
  `ordered_at` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`) CLUSTERED,
  KEY `orders_book_id_idx` (`book_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin;
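After running the script, you may want to confirm that the tables exist and that the TiFlash replica requested for the ratings table is being created. The following is a sketch rather than a required step. It assumes the cluster has at least one TiFlash node, since the ALTER TABLE ... SET TIFLASH REPLICA statement is likely to fail without one:

```sql
-- List the tables created by the script.
SHOW TABLES IN bookshop;

-- Check the TiFlash replica status of the Bookshop tables.
-- AVAILABLE = 1 means the replica is ready to serve queries.
SELECT TABLE_NAME, REPLICA_COUNT, AVAILABLE, PROGRESS
FROM information_schema.tiflash_replica
WHERE TABLE_SCHEMA = 'bookshop';
```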