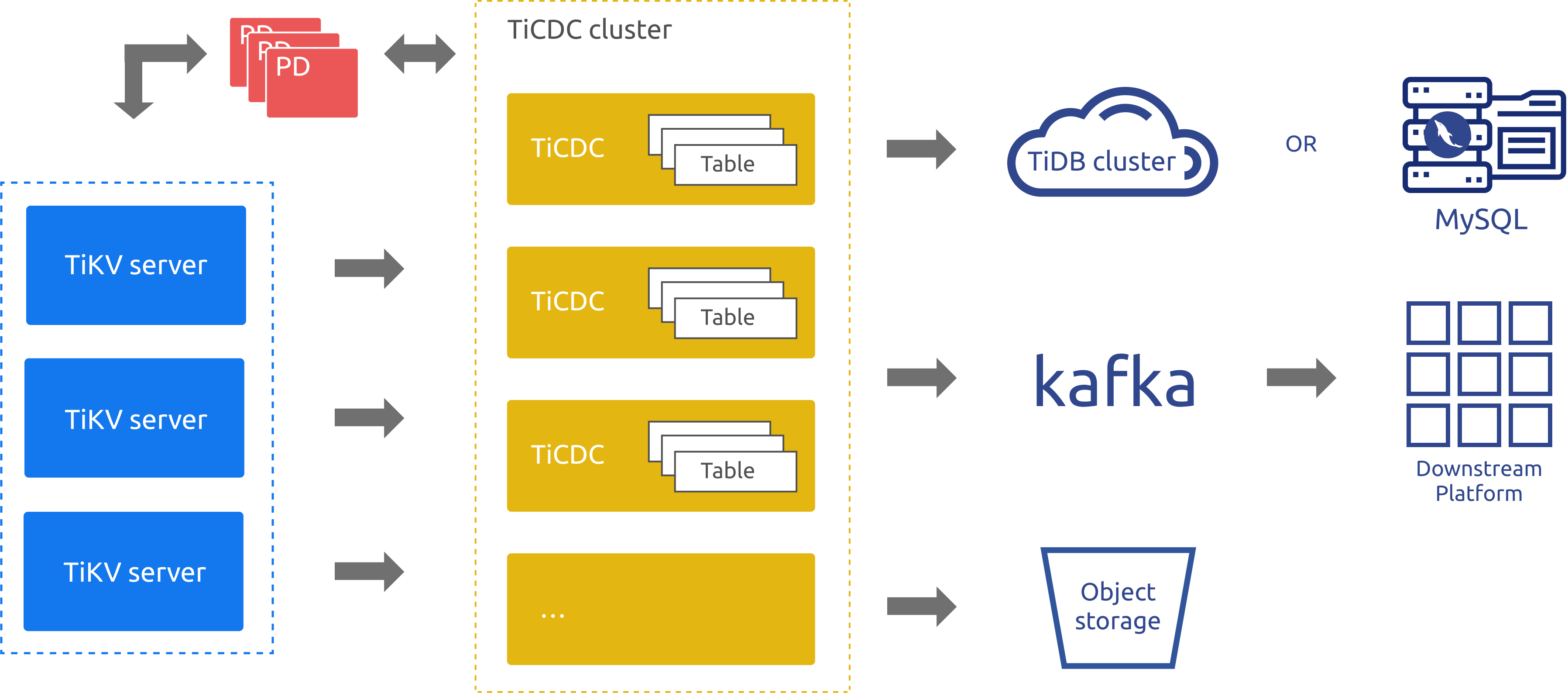

TiCDC Overview

TiCDC is a tool used for replicating incremental data of TiDB. Specifically, TiCDC pulls TiKV change logs, sorts captured data, and exports row-based incremental data to downstream databases.

Usage scenarios

- Database disaster recovery: TiCDC can be used for disaster recovery between homogeneous databases to ensure eventual data consistency of primary and secondary databases after a disaster event. This function works only with TiDB primary and secondary clusters.

- Data integration: TiCDC provides the TiCDC Canal-JSON Protocol, which allows other systems to subscribe to data changes from TiCDC. In this way, TiCDC provides data sources for various scenarios such as monitoring, caching, global indexing, data analysis, and primary-secondary replication between heterogeneous databases.

TiCDC architecture

When TiCDC is running, it is a stateless node that achieves high availability through etcd in PD. The TiCDC cluster supports creating multiple replication tasks to replicate data to multiple different downstream platforms.

The architecture of TiCDC is shown in the following figure:

System roles

- TiKV CDC component: Only outputs key-value (KV) change logs.

    - Assembles KV change logs in the internal logic.

    - Provides the interface to output KV change logs. The data sent includes real-time change logs and incremental scan change logs.

- capture: The operating process of TiCDC. Multiple captures form a TiCDC cluster that replicates KV change logs.

    - Each capture pulls a part of KV change logs.

    - Sorts the pulled KV change log(s).

    - Restores the transaction to downstream or outputs the log based on the TiCDC open protocol.

Replication features

This section introduces the replication features of TiCDC.

Sink support

Currently, the TiCDC sink component supports replicating data to the following downstream platforms:

- Databases compatible with the MySQL protocol. The sink component provides eventual consistency support.

- Kafka based on the TiCDC Open Protocol. The sink component ensures row-level order, eventual consistency, or strict transactional consistency.

Ensure replication order and consistency

Replication order

For all DDL or DML statements, TiCDC outputs them at least once.

When the TiKV or TiCDC cluster encounters failure, TiCDC might send the same DDL/DML statement repeatedly. For duplicated DDL/DML statements:

- MySQL sink can execute DDL statements repeatedly. For DDL statements that can be executed repeatedly in the downstream, such as truncate table, the statement is executed successfully. For those that cannot be executed repeatedly, such as create table, the execution fails, and TiCDC ignores the error and continues the replication.

- Kafka sink sends messages repeatedly, but the duplicate messages do not affect the constraints of Resolved Ts. Users can filter the duplicated messages from Kafka consumers.
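Because delivery is at least once, a consumer has to tolerate redelivered events. The following is a minimal sketch of one way to filter them, not TiCDC's own logic. The `RowEvent` type and its fields are hypothetical simplifications rather than the real message layout, and the sketch assumes that events for the same row arrive in commit order and that a row appears at most once per commit timestamp.

```python
from typing import Dict, Iterable, Iterator, NamedTuple, Tuple


class RowEvent(NamedTuple):
    """Hypothetical, simplified row change event (not the real message layout)."""
    table: str
    key: str        # value identifying the row, for example its primary key
    commit_ts: int  # commit timestamp of the upstream transaction
    payload: str


def drop_duplicates(events: Iterable[RowEvent]) -> Iterator[RowEvent]:
    """Yield each row change once, skipping redelivered events.

    For a given row, an event whose commit_ts is not greater than the last
    one already seen is treated as a redelivery and skipped.
    """
    last_seen: Dict[Tuple[str, str], int] = {}
    for ev in events:
        row = (ev.table, ev.key)
        if ev.commit_ts <= last_seen.get(row, -1):
            continue  # already processed; resent after a failure
        last_seen[row] = ev.commit_ts
        yield ev
```

In a real consumer the `last_seen` state would need to be persisted or bounded; the in-memory dictionary here only illustrates the idea.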

Replication consistency

MySQL sink

- TiCDC does not split single-table transactions and ensures the atomicity of single-table transactions.

- TiCDC does not ensure that the execution order of downstream transactions is the same as that of upstream transactions.

- TiCDC splits cross-table transactions in the unit of table and does not ensure the atomicity of cross-table transactions.

- TiCDC ensures that the order of single-row updates is consistent with that in the upstream.
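To make the cross-table behavior concrete, here is a small sketch of splitting one upstream transaction in the unit of table. It is an illustration only, not TiCDC's implementation; `RowChange` and `split_by_table` are hypothetical names. Each resulting group can be applied downstream as its own transaction, which is why single-table atomicity is kept while cross-table atomicity is not.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical representation: (table name, description of the row change).
RowChange = Tuple[str, str]


def split_by_table(txn: List[RowChange]) -> Dict[str, List[RowChange]]:
    """Group the row changes of one transaction by table.

    The relative order of changes within each table is preserved.
    """
    per_table: Dict[str, List[RowChange]] = defaultdict(list)
    for change in txn:
        per_table[change[0]].append(change)
    return dict(per_table)


upstream_txn = [
    ("orders", "insert id=1"),
    ("stock", "update sku=7"),
    ("orders", "update id=1"),
]
groups = split_by_table(upstream_txn)
```

Here `groups` holds two independent units, one for `orders` and one for `stock`, so a downstream reader might briefly observe the `orders` changes without the `stock` change.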

Kafka sink

- TiCDC provides different strategies for data distribution. You can distribute data to different Kafka partitions based on the table, primary key, or timestamp.

- For different distribution strategies, the different consumer implementations can achieve different levels of consistency, including row-level consistency, eventual consistency, or cross-table transactional consistency.

- TiCDC does not have an implementation of Kafka consumers, but only provides TiCDC Open Protocol. You can implement the Kafka consumer according to this protocol.
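The effect of the distribution strategy can be sketched as a partition-selection function. This is a simplified illustration, not TiCDC's dispatcher: the strategy labels (`"table"`, `"primary-key"`, `"timestamp"`), the event fields, and the use of CRC32 are all assumptions made for the example.

```python
import zlib
from typing import Dict


def pick_partition(event: Dict[str, object], strategy: str, num_partitions: int) -> int:
    """Choose a Kafka partition for a row change event.

    `event` is a hypothetical dict with "schema", "table", "pk", and
    "commit_ts" keys; the strategy labels are illustrative only.
    """
    if strategy == "table":
        # All rows of a table share one partition, so per-table order is kept.
        basis = f"{event['schema']}.{event['table']}"
    elif strategy == "primary-key":
        # Rows spread across partitions; only per-row order is kept.
        basis = f"{event['schema']}.{event['table']}:{event['pk']}"
    elif strategy == "timestamp":
        # Changes with the same commit timestamp share a partition.
        return int(event["commit_ts"]) % num_partitions
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    # CRC32 gives a hash that is stable across processes and runs.
    return zlib.crc32(basis.encode("utf-8")) % num_partitions
```

Kafka only orders messages within a partition, so the chosen strategy determines which level of consistency a consumer can rebuild.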

Restrictions

There are some restrictions when using TiCDC.

Requirements for valid index

TiCDC only replicates the table that has at least one valid index. A valid index is defined as follows:

- The primary key (PRIMARY KEY) is a valid index.

- The unique index (UNIQUE INDEX) that meets the following conditions at the same time is a valid index:

    - Every column of the index is explicitly defined as non-nullable (NOT NULL).

    - The index does not have the virtual generated column (VIRTUAL GENERATED COLUMNS).

Since v4.0.8, TiCDC supports replicating tables without a valid index by modifying the task configuration. However, this compromises the guarantee of data consistency to some extent. For more details, see Replicate tables without a valid index.
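The valid-index rules above can be expressed as a small check. The sketch below uses a hypothetical in-memory schema description (`Column`, `Index`, `Table`); it does not query TiDB and is not how TiCDC itself performs the check.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Column:
    name: str
    nullable: bool = True            # False means the column is declared NOT NULL
    virtual_generated: bool = False  # True for a virtual generated column


@dataclass
class Index:
    columns: List[str]
    primary: bool = False
    unique: bool = False


@dataclass
class Table:
    columns: List[Column]
    indexes: List[Index] = field(default_factory=list)


def is_valid_index(table: Table, index: Index) -> bool:
    """Apply the rules: a primary key, or a unique index whose columns are
    all NOT NULL and none of which is a virtual generated column."""
    if index.primary:
        return True
    if not index.unique:
        return False
    by_name = {c.name: c for c in table.columns}
    return all(
        not by_name[name].nullable and not by_name[name].virtual_generated
        for name in index.columns
    )


def has_valid_index(table: Table) -> bool:
    """Return True if at least one index of the table is valid."""
    return any(is_valid_index(table, idx) for idx in table.indexes)
```

For example, a table whose only index is a unique index over a nullable column would fail this check, and by default would not be replicated.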

Unsupported scenarios

Currently, the following scenarios are not supported:

- The TiKV cluster that uses RawKV alone.

- The DDL operation CREATE SEQUENCE and the SEQUENCE function in TiDB. When the upstream TiDB uses SEQUENCE, TiCDC ignores SEQUENCE DDL operations/functions performed upstream. However, DML operations using SEQUENCE functions can be correctly replicated.

TiCDC only provides partial support for scenarios of large transactions in the upstream. For details, refer to FAQ: Does TiCDC support replicating large transactions? Is there any risk?.

Since v5.3.0, TiCDC no longer supports the cyclic replication feature.

Install and deploy TiCDC

You can either deploy TiCDC along with a new TiDB cluster or add the TiCDC component to an existing TiDB cluster. For details, see Deploy TiCDC.

Manage TiCDC Cluster and Replication Tasks

Currently, you can use the cdc cli tool to manage the status of a TiCDC cluster and data replication tasks. For details, see:

- Use cdc cli to manage cluster status and data replication task

- Use OpenAPI to manage cluster status and data replication task

TiCDC Open Protocol

TiCDC Open Protocol is a row-level data change notification protocol that provides data sources for monitoring, caching, full-text indexing, analysis engines, and primary-secondary replication between different databases. TiCDC complies with TiCDC Open Protocol and replicates data changes of TiDB to third-party data media such as message queues (MQ). For more information, see TiCDC Open Protocol.

Compatibility notes

Incompatibility issue caused by using the TiCDC v5.0.0-rc cdc cli tool to operate a v4.0.x cluster

When using the cdc cli tool of TiCDC v5.0.0-rc to operate a v4.0.x TiCDC cluster, you might encounter the following abnormal situations:

- If the TiCDC cluster is v4.0.8 or an earlier version, using the v5.0.0-rc cdc cli tool to create a replication task might cause cluster anomalies and get the replication task stuck.

- If the TiCDC cluster is v4.0.9 or a later version, using the v5.0.0-rc cdc cli tool to create a replication task will cause the old value and unified sorter features to be unexpectedly enabled by default.

Solutions: Use the cdc executable file corresponding to the TiCDC cluster version to perform the following operations:

- Delete the changefeed created using the v5.0.0-rc cdc cli tool. For example, run the tiup cdc:v4.0.9 cli changefeed remove -c xxxx --pd=xxxxx --force command.

- If the replication task is stuck, restart the TiCDC cluster. For example, run the tiup cluster restart <cluster_name> -R cdc command.

- Re-create the changefeed. For example, run the tiup cdc:v4.0.9 cli changefeed create --sink-uri=xxxx --pd=xxx command.

The above issue exists only when cdc cli is v5.0.0-rc. Other v5.0.x versions of the cdc cli tool are compatible with v4.0.x clusters.

Compatibility notes for sort-dir and data-dir

The sort-dir configuration is used to specify the temporary file directory for the TiCDC sorter. Its functionalities might vary in different versions. The following table lists sort-dir's compatibility changes across versions.

| Version | sort-dir functionality | Note | Recommendation |
|---|---|---|---|
| v4.0.11 or an earlier v4.0 version, v5.0.0-rc | It is a changefeed configuration item and specifies the temporary file directory for the file sorter and unified sorter. | In these versions, file sorter and unified sorter are experimental features and NOT recommended for the production environment. If multiple changefeeds use the unified sorter as their sort-engine, the actual temporary file directory might be the sort-dir configuration of any changefeed, and the directory used for each TiCDC node might be different. | It is not recommended to use unified sorter in the production environment. |
| v4.0.12, v4.0.13, v5.0.0, and v5.0.1 | It is a configuration item of changefeed or of cdc server. | By default, the sort-dir configuration of a changefeed does not take effect, and the sort-dir configuration of cdc server defaults to /tmp/cdc_sort. It is recommended to only configure cdc server in the production environment. If you use TiUP to deploy TiCDC, it is recommended to use the latest TiUP version and set sorter.sort-dir in the TiCDC server configuration. The unified sorter is enabled by default in v4.0.13, v5.0.0, and v5.0.1. If you want to upgrade your cluster to these versions, make sure that you have correctly configured sorter.sort-dir in the TiCDC server configuration. | You need to configure sort-dir using the cdc server command-line parameter (or TiUP). |
| v4.0.14 and later v4.0 versions, v5.0.3 and later v5.0 versions, later TiDB versions | sort-dir is deprecated. It is recommended to configure data-dir. | You can configure data-dir using the latest version of TiUP. In these TiDB versions, unified sorter is enabled by default. Make sure that data-dir has been configured correctly when you upgrade your cluster. Otherwise, /tmp/cdc_data will be used by default as the temporary file directory. If the storage capacity of the device where the directory is located is insufficient, the problem of insufficient hard disk space might occur. In this situation, the previous sort-dir configuration of changefeed will become invalid. | You need to configure data-dir using the cdc server command-line parameter (or TiUP). |

Compatibility with temporary tables

Since v5.3.0, TiCDC supports global temporary tables. Replicating global temporary tables to the downstream using TiCDC of a version earlier than v5.3.0 causes a table definition error.

If the upstream cluster contains a global temporary table, the downstream TiDB cluster is expected to be v5.3.0 or a later version. Otherwise, an error occurs during the replication process.

TiCDC FAQs and troubleshooting

- To learn the FAQs of TiCDC, see TiCDC FAQs.

- To learn how to troubleshoot TiCDC, see Troubleshoot TiCDC.