Perform a Proof of Concept (PoC) with TiDB Cloud

TiDB Cloud is a Database-as-a-Service (DBaaS) product that delivers everything great about TiDB in a fully managed cloud database. It helps you focus on your applications, instead of the complexities of your database. TiDB Cloud is currently available on both Amazon Web Services (AWS) and Google Cloud Platform (GCP).

Initiating a proof of concept (PoC) is the best way to determine whether TiDB Cloud fits your business needs. It also familiarizes you with the key features of TiDB Cloud in a short time. By running performance tests, you can see whether your workload runs efficiently on TiDB Cloud. You can also evaluate the effort required to migrate your data and adapt configurations.

This document describes the typical PoC procedures to help you complete a TiDB Cloud PoC quickly. These procedures are best practices validated by TiDB experts and a large customer base.

If you are interested in doing a PoC, feel free to contact PingCAP before you get started. The support team can help you create a test plan and walk you through the PoC procedures smoothly.

Alternatively, for a quick evaluation, you can create a Developer Tier cluster (one-year free trial) to get familiar with TiDB Cloud. Note that the Developer Tier has some special terms and conditions.

Overview of the PoC procedures

The purpose of a PoC is to test whether TiDB Cloud meets your business requirements. A typical PoC lasts 14 days, during which you are expected to focus on completing it.

A typical TiDB Cloud PoC consists of the following steps:

- Define success criteria and create a test plan

- Identify characteristics of your workload

- Sign up and create a dedicated cluster for the PoC

- Adapt your schemas and SQL

- Import data

- Run your workload and evaluate results

- Explore more features

- Clean up the environment and finish the PoC

Step 1. Define success criteria and create a test plan

When evaluating TiDB Cloud through a PoC, first decide your points of interest and the corresponding technical evaluation criteria based on your business needs. Then clarify your expectations and goals for the PoC. Clear, measurable technical criteria and a detailed test plan help you focus on the key aspects and cover the business-level requirements, so that the PoC gives you concrete answers.

Use the following questions to help identify the goals of your PoC:

- What is the scenario of your workload?

- What is the dataset size or workload of your business? What is the growth rate?

- What are the performance requirements, including the business-critical throughput or latency requirements?

- What are the availability and stability requirements, including the minimum acceptable planned or unplanned downtime?

- What are the necessary metrics for operational efficiency? How do you measure them?

- What are the security and compliance requirements for your workload?
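To make the criteria measurable, it can help to write them down as explicit thresholds and check each test run against them. The following Python sketch shows one way to do this. The class names, fields and threshold values are hypothetical examples and are not part of TiDB Cloud.

```python
from dataclasses import dataclass


@dataclass
class SuccessCriteria:
    """Measurable PoC targets; all values used below are hypothetical examples."""
    min_qps: float               # business-critical throughput
    max_p99_latency_ms: float    # latency requirement
    max_downtime_minutes: float  # acceptable unplanned downtime during the PoC


@dataclass
class TestResult:
    """Numbers recorded from one test run."""
    qps: float
    p99_latency_ms: float
    downtime_minutes: float


def evaluate(criteria: SuccessCriteria, result: TestResult) -> dict[str, bool]:
    """Return pass/fail per criterion so that failures are easy to spot."""
    return {
        "throughput": result.qps >= criteria.min_qps,
        "latency": result.p99_latency_ms <= criteria.max_p99_latency_ms,
        "availability": result.downtime_minutes <= criteria.max_downtime_minutes,
    }


criteria = SuccessCriteria(min_qps=5000, max_p99_latency_ms=50, max_downtime_minutes=0)
run = TestResult(qps=6200, p99_latency_ms=42.5, downtime_minutes=0)
report = evaluate(criteria, run)
print(report)  # {'throughput': True, 'latency': True, 'availability': True}
```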

For more information about the success criteria and how to create a test plan, feel free to contact PingCAP.

Step 2. Identify characteristics of your workload

TiDB Cloud is suitable for various use cases that require high availability and strong consistency with a large volume of data. TiDB Introduction lists the key features and scenarios. You can check whether they apply to your business scenarios:

- Horizontally scaling out or scaling in

- Financial-grade high availability

- Real-time HTAP

- Compatibility with the MySQL 5.7 protocol and the MySQL ecosystem

You might also be interested in using TiFlash, a columnar storage engine that helps speed up analytical processing. During the PoC, you can use the TiFlash feature at any time.

Step 3. Sign up and create a dedicated cluster for the PoC

To create a dedicated cluster for the PoC, take the following steps:

Fill in the PoC application form by doing one of the following:

- If you have already created a Developer Tier cluster (one-year free trial), a prompt bar about submitting your PoC application is displayed in the TiDB Cloud console. Click the PoC application link in the bar to fill in the PoC application form.

- If you have not created a Developer Tier yet, go to the Apply for PoC page to fill in the PoC application form.

Once you submit the form, the TiDB Cloud support team will review your application, contact you, and transfer credits to your account once the application is approved. You can also contact a PingCAP support engineer to assist with your PoC procedures to ensure the PoC runs as smoothly as possible.

Refer to Quick Start to create a Dedicated Tier cluster for the PoC.

Before you create a cluster, it is recommended to plan capacity to determine the cluster size. You can start with an estimated number of TiDB, TiKV, and TiFlash nodes, and scale out the cluster later to meet performance requirements. For more details, see the following documents or consult our support team.

- For more information about estimation practice, see Size Your TiDB.

- For configurations of the dedicated cluster, see Create a TiDB Cluster. Configure the cluster size for TiDB, TiKV, and TiFlash (optional) respectively.

- For how to plan and optimize your PoC credit consumption, see the FAQ in this document.

- For more information about scaling, see Scale Your TiDB Cluster.
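As a rough starting point for TiKV sizing, the following Python sketch divides the replicated data size by the usable storage per node. It rests on these assumptions:

- Three replicas, which is the TiDB default.
- A hypothetical 80% storage utilization ceiling.
- No compression.

It also rounds up to a multiple of the replica count. That rounding is a simplifying assumption, not a documented TiDB Cloud rule. Treat the result as a first guess to refine with Size Your TiDB or with the support team.

```python
import math


def estimate_tikv_nodes(
    data_gb: float,
    node_storage_gb: float,
    replicas: int = 3,
    max_utilization: float = 0.8,
) -> int:
    """Rough TiKV node count for a dataset (hypothetical helper).

    Assumes every row is stored `replicas` times, each node should stay below
    `max_utilization` of its storage, and compression is ignored.
    """
    raw_total_gb = data_gb * replicas
    usable_per_node_gb = node_storage_gb * max_utilization
    nodes = math.ceil(raw_total_gb / usable_per_node_gb)
    # Assumption: round up to a multiple of `replicas` so replicas spread evenly,
    # and never go below `replicas` nodes.
    return max(replicas, math.ceil(nodes / replicas) * replicas)


# 1,000 GB of data on nodes with 1,000 GB of storage each.
print(estimate_tikv_nodes(data_gb=1000, node_storage_gb=1000))  # 6
```

In this example, 3,000 GB of replicated data at 800 GB usable per node needs 4 nodes, which rounds up to 6.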

Once a dedicated PoC cluster is created, you are ready to load data and perform a series of tests. For how to connect to a TiDB cluster, see Connect to Your TiDB Cluster.

For a newly created cluster, note the following configurations:

- The default time zone (the Create Time column on the Dashboard) is UTC. You can change it to your local time zone by following Set the Local Time Zone.

- By default, a new cluster performs a full database backup daily. You can specify a preferred backup time or back up data manually. For the default backup time and more details, see Back up and Restore TiDB Cluster Data.

Step 4. Adapt your schemas and SQL

Next, you can load your database schemas, including tables and indexes, into the TiDB cluster.

Because the amount of PoC credits is limited, to maximize the value of credits, it is recommended that you create a Developer Tier cluster (one-year free trial) for compatibility tests and preliminary analysis on TiDB Cloud.

TiDB Cloud is highly compatible with MySQL 5.7. You can directly import your data into TiDB if it is MySQL-compatible or can be adapted to be compatible with MySQL.

For more information about compatibilities, see the following documents:

- TiDB compatibility with MySQL.

- TiDB features that are different from MySQL.

- TiDB's Keywords and Reserved Words.

- TiDB Limitations.

Here are some best practices:

- Check whether there are inefficiencies in the schema setup.

- Remove unnecessary indexes.

- Plan the partitioning strategy so that partitioning is effective.

- Avoid hotspot issues caused by Right-Hand-Side Index Growth, for example, indexes on timestamp columns.

- Avoid hotspot issues by using SHARD_ROW_ID_BITS and AUTO_RANDOM.
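Monotonically increasing keys, such as auto-increment IDs or timestamps, are always written to the end of the key space. As a result, all new rows land in the same Region.

TiDB offers two ways to spread these writes by placing shard bits in the high bits of the row key:

- AUTO_RANDOM, for example `id BIGINT PRIMARY KEY AUTO_RANDOM`.
- SHARD_ROW_ID_BITS, for example the table option `SHARD_ROW_ID_BITS = 4` on a table that uses the implicit row ID.

The following Python sketch is a simplified model of that idea, not TiDB's implementation. It puts a shard value in the five bits just below the sign bit and counts how many of eight equal key ranges receive writes. Choosing the shard round-robin is an illustrative assumption; TiDB chooses the shard value itself.

```python
from collections import Counter

TOTAL_BITS = 64
SIGN_BITS = 1


def auto_random_like_id(seq: int, shard: int, shard_bits: int = 5) -> int:
    """Put `shard` in the high bits (below the sign bit) and `seq` in the low bits."""
    low_bits = TOTAL_BITS - SIGN_BITS - shard_bits
    assert 0 <= shard < (1 << shard_bits) and 0 <= seq < (1 << low_bits)
    return (shard << low_bits) | seq


def range_of(key: int, num_ranges: int) -> int:
    """Index of the equal slice of the positive int64 key space that holds `key`."""
    space = 1 << (TOTAL_BITS - SIGN_BITS)
    return key * num_ranges // space


NUM_RANGES = 8
rows = range(1, 10_001)

# Monotonically increasing IDs: every insert lands in the same key range.
sequential = Counter(range_of(i, NUM_RANGES) for i in rows)

# Shard bits taken round-robin (illustrative only): inserts spread over all ranges.
sharded = Counter(range_of(auto_random_like_id(i, i % 32), NUM_RANGES) for i in rows)

print(len(sequential), len(sharded))  # 1 8
```

The same reasoning applies to an index on a timestamp column: its entries also grow at the right-hand side of the key space, so new writes concentrate in one range.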

For SQL statements, you might need to adapt them depending on the level of your data source's compatibility with TiDB.

If you have any questions, contact PingCAP for consultation.

Step 5. Import data

You can import a small dataset to quickly test feasibility, or a large dataset to test the throughput of TiDB data migration tools. Although TiDB provides sample data, it is strongly recommended to perform a test with real workloads from your business.

You can import data in various formats to TiDB Cloud:

- Import sample data in the TiDB Dumpling format

- Migrate from Amazon Aurora MySQL

- Import CSV Files from Amazon S3 or GCS

- Import Apache Parquet Files

- For information about the collations supported by TiDB Cloud, see Migrate from MySQL-Compatible Databases. Understanding how your data is originally stored is very helpful.

- Data import on the Data Import Task page does not generate additional billing fees.
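Before you upload CSV files to Amazon S3 or GCS, a quick local sanity check can catch malformed rows early. The following Python sketch uses only the standard `csv` module. The `check_csv` helper is hypothetical. It only checks that every row has as many fields as the header; it does not check data types, character sets, or the import options that TiDB Cloud expects.

```python
import csv
import io


def check_csv(text: str, delimiter: str = ",") -> list[str]:
    """Return a list of problems found in CSV text (hypothetical pre-import check)."""
    problems = []
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(reader, None)
    if header is None:
        return ["file is empty"]
    # Row numbers count CSV records, with the header as row 1.
    for row_no, row in enumerate(reader, start=2):
        if len(row) != len(header):
            problems.append(f"row {row_no}: expected {len(header)} fields, got {len(row)}")
    return problems


sample = "id,name,created_at\n1,alice,2022-01-01\n2,bob\n"
print(check_csv(sample))  # ['row 3: expected 3 fields, got 2']
```

For a real file, read its contents with `open(path, newline="")` and pass the text to `check_csv`.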

Step 6. Run your workload and evaluate results

Now you have created the environment, adapted the schemas, and imported data. It is time to test your workload.

Before testing the workload, consider performing a manual backup, so that you can restore the database to its original state if needed. For more information, see Back up and Restore TiDB Cluster Data.

After starting the workload, you can observe the system using the following methods:

- The commonly used metrics of the cluster can be found on the cluster overview page, including Total QPS, Latency, Connections, TiFlash Request QPS, TiFlash Request Duration, TiFlash Storage Size, TiKV Storage Size, TiDB CPU, TiKV CPU, TiKV IO Read, and TiKV IO Write. See Monitor a TiDB Cluster.

- Go to Diagnosis > Statements, where you can observe SQL execution and easily locate performance problems without querying the system tables. See Statement Analysis.

- Go to Diagnosis > Key Visualizer, where you can view TiDB data access patterns and data hotspots. See Key Visualizer.

- You can also integrate these metrics into your own Datadog or Prometheus. See Third-party integrations.

Now it is time to evaluate the test results.

To get a more accurate evaluation, determine the metrics baseline before the test, and record the test results for each run. By analyzing the results, you can decide whether TiDB Cloud is a good fit for your application. The results also indicate the running status of the system, so you can adjust the system according to the metrics. For example:

Evaluate whether the system performance meets your requirements. Check the total QPS and latency. If the system performance is not satisfactory, you can tune performance as follows:

- Monitor and optimize the network latency.

- Investigate and tune the SQL performance.

- Monitor and resolve hotspot issues.

Evaluate the storage size and CPU usage rate, and scale out or scale in the TiDB cluster accordingly. Refer to the FAQ section for scaling details.
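Recording each run in the same shape makes comparisons against the baseline straightforward. The following Python sketch summarizes the per-request latencies of one run into QPS, mean latency, and p99 latency (nearest-rank method). It then reports the relative change against a baseline run. The function names and sample data are hypothetical; in practice, the latencies would come from your load-testing tool.

```python
import math
import statistics


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, for example pct=99 for p99."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Multiply before dividing to avoid float rounding in the rank.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms: list[float], duration_s: float) -> dict[str, float]:
    """Summarize one test run: throughput, mean latency, and p99 latency."""
    return {
        "qps": len(latencies_ms) / duration_s,
        "mean_ms": statistics.fmean(latencies_ms),
        "p99_ms": percentile(latencies_ms, 99),
    }


def compare(baseline: dict[str, float], run: dict[str, float]) -> dict[str, float]:
    """Relative change of each metric versus the baseline (0.1 means +10%)."""
    return {name: (run[name] - baseline[name]) / baseline[name] for name in baseline}


# Hypothetical data: 100 requests in 10 seconds per run.
baseline = summarize([float(ms) for ms in range(1, 101)], duration_s=10.0)
print(baseline)  # {'qps': 10.0, 'mean_ms': 50.5, 'p99_ms': 99.0}
latest = summarize([float(ms) for ms in range(2, 102)], duration_s=10.0)
print(compare(baseline, latest))
```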

The following are tips for performance tuning:

Improve write performance

- Increase the write throughput by scaling out the TiDB clusters (see Scale a TiDB Cluster).

- Reduce lock conflicts by using the optimistic transaction model.

Improve query performance

- Check the SQL execution plan on the Diagnosis > Statements page.

- Check hotspot issues on the Diagnosis > Key Visualizer page.

- Monitor whether the TiDB cluster is running out of capacity on the Overview > Capacity Metrics page.

- Use the TiFlash feature to optimize analytical processing. See Use an HTAP Cluster.

Step 7. Explore more features

Now that the workload testing is finished, you can explore more features, such as upgrade and backup.

Upgrade

TiDB Cloud upgrades TiDB clusters regularly. You can also submit a support ticket to request an upgrade of your clusters. See Upgrade a TiDB Cluster.

Backup

To avoid vendor lock-in, you can use the daily full backups to migrate data to a new cluster, and use Dumpling to export data. For more information, see Export Data from TiDB.

Step 8. Clean up the environment and finish the PoC

You have completed the full cycle of a PoC after you test TiDB Cloud using real workloads and get the testing results. These results help you determine if TiDB Cloud meets your expectations. Meanwhile, you have accumulated best practices for using TiDB Cloud.

If you want to try TiDB Cloud on a larger scale, get full access to TiDB Cloud by creating a Dedicated Tier cluster. For example, you might run a new round of deployments and tests with other node storage sizes offered by TiDB Cloud.

If your credits are running out and you want to continue with the PoC, contact TiDB Cloud Support for consultation.

You can end the PoC and remove the test environment anytime. For more information, see Delete a TiDB Cluster.

We highly appreciate any feedback, for example on the PoC process, feature requests, or how we can improve our products. To share it with our support team, fill in the TiDB Cloud Feedback form.

FAQ

1. How long does it take to back up and restore my data?

TiDB Cloud provides two types of database backup: automatic backup and manual backup. Both methods back up the full database.

The time it takes to back up and restore data varies. It depends on the number of tables, the number of replicas, how CPU-intensive the workload is, and other factors. The backup and restore rate of a single TiKV node is approximately 50 MB/s.

Database backup and restore operations are typically CPU-intensive and always require additional CPU resources. They might affect QPS and transaction latency by 10% to 50%, depending on how CPU-intensive the environment is.
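The approximate 50 MB/s per-node rate gives a back-of-the-envelope estimate of backup or restore duration. The following Python sketch assumes the following:

- Data is spread evenly across TiKV nodes.
- The nodes back up in parallel.
- 1 GB equals 1024 MB.

It ignores the other factors mentioned above, such as the number of tables and the current CPU load, so treat the result as an order of magnitude rather than a prediction. The helper name is hypothetical.

```python
def estimate_backup_minutes(
    data_gb: float, tikv_nodes: int, mb_per_s_per_node: float = 50.0
) -> float:
    """Rough backup or restore duration in minutes (hypothetical helper)."""
    if tikv_nodes <= 0:
        raise ValueError("need at least one TiKV node")
    total_mb = data_gb * 1024
    # Assumption: all nodes work in parallel at the same per-node rate.
    seconds = total_mb / (mb_per_s_per_node * tikv_nodes)
    return seconds / 60


# 500 GB of data on 3 TiKV nodes.
print(round(estimate_backup_minutes(500, 3), 1))  # 56.9
```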

2. When do I need to scale out and scale in?

The following are some considerations about scaling:

- During peak hours or data import, if you observe that the capacity metrics on the dashboard have reached the upper limits (see Monitor a TiDB Cluster), you might need to scale out the cluster.

- If you observe that the resource usage is persistently low, for example, only 10%-20% of CPU usage, you can scale in the cluster to save resources.

You can scale out clusters yourself in the console. To scale in a cluster, contact TiDB Cloud Support for help. For more information about scaling, see Scale Your TiDB Cluster. You can keep in touch with the support team to track the exact progress. Wait for the scaling operation to finish before starting your test, because data rebalancing during scaling can affect performance.
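The rules of thumb above can be written as a simple check over recent CPU usage samples. The following Python sketch is hypothetical:

- The 20% floor comes from the example above.
- The 80% ceiling is an assumed stand-in for the upper limits shown by the capacity metrics.

Real decisions should also consider storage usage and the other capacity metrics.

```python
def scaling_hint(
    cpu_pct_samples: list[float], low: float = 20.0, high: float = 80.0
) -> str:
    """Suggest a scaling action from CPU samples taken over a representative period."""
    if not cpu_pct_samples:
        raise ValueError("no samples")
    if max(cpu_pct_samples) >= high:
        # Peaks reach the assumed upper limit: consider scaling out in the console.
        return "scale out"
    if all(sample <= low for sample in cpu_pct_samples):
        # Persistently low usage: consider scaling in through TiDB Cloud Support.
        return "scale in"
    return "keep current size"


print(scaling_hint([12.0, 15.5, 18.0]))  # scale in
print(scaling_hint([45.0, 86.0, 60.0]))  # scale out
```

Checking peaks before the low-usage rule means that a cluster that is mostly idle but saturated at peak hours is still flagged for scaling out.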

3. How can I make the best use of my PoC credits?

Once your application for the PoC is approved, you will receive credits in your account. Generally, the credits are sufficient for a 14-day PoC. The credits are charged by the type of nodes and the number of nodes, on an hourly basis. For more information, see TiDB Cloud Billing.

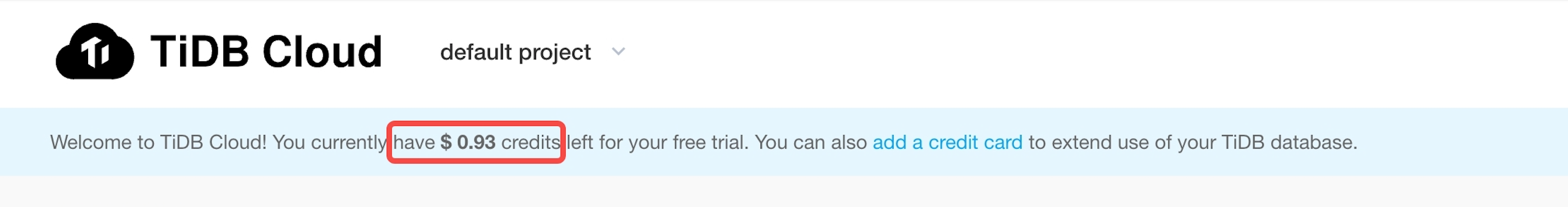

To check the credits left for your PoC, go to the Clusters page, as shown in the following screenshot.

Alternatively, you can also click the account name in the upper-right corner of the TiDB Cloud console, click Billing, and click Credits to see the credit details page.

To save credits, remove clusters that you are not using. Currently, you cannot stop a cluster. Before removing a cluster, make sure that your backups are up to date so that you can restore the cluster when you resume your PoC.

If you still have unused credits after your PoC is completed, you can continue using them to pay TiDB cluster fees as long as they have not expired.
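Because credits are charged per node per hour, you can estimate how long your remaining credits will last for a given cluster shape. In the following Python sketch, the node types are those of a TiDB cluster, but the hourly rates are made-up placeholders; see TiDB Cloud Billing for actual prices. The helper names are hypothetical.

```python
import math


def hourly_cost(node_counts: dict[str, int], hourly_rates: dict[str, float]) -> float:
    """Credits consumed per hour: sum of (number of nodes x hourly rate) per node type."""
    return sum(count * hourly_rates[node_type] for node_type, count in node_counts.items())


def days_remaining(
    credits: float, node_counts: dict[str, int], hourly_rates: dict[str, float]
) -> float:
    """Days the remaining credits last if the cluster runs around the clock."""
    cost = hourly_cost(node_counts, hourly_rates)
    return math.inf if cost == 0 else credits / cost / 24


# Placeholder rates in credits per node-hour (hypothetical, not real prices).
rates = {"TiDB": 1.0, "TiKV": 1.5, "TiFlash": 2.0}
cluster = {"TiDB": 2, "TiKV": 3, "TiFlash": 1}
print(hourly_cost(cluster, rates))                     # 8.5
print(round(days_remaining(2000, cluster, rates), 1))  # 9.8
```

The same arithmetic shows why removing unused clusters saves credits: every node removed lowers the hourly cost.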

4. Can I take more than 2 weeks to complete a PoC?

If you want to extend the PoC trial period or are running out of credits, contact PingCAP for help.

5. I'm stuck with a technical problem. How do I get help for my PoC?

You can always contact PingCAP for help.
